How to Detect Bot Traffic Draining Your Budget

Takeaway: If you’ve read our breakdown of how bot traffic silently inflates Acquia hosting costs, you already know that not all traffic is created equal—and some of it is quietly eating your budget alive. This guide is your next step.

The question is no longer “Could this be happening to me?” but “How do I prove it, and what can I do right now to get it under control?”

- We’ll show you how to confirm the problem in your own environment.

- You’ll learn how to identify the automated activity your analytics don’t see.

- And you’ll set the security foundations that make advanced bot protection far more effective.

By the end, you’ll know whether bots are your real budget culprit—and be ready for the final step: Keeping them off your infrastructure for good.

This blog is part of our Drupal bot protection playbook.

Recap: The analytics blind spot that's draining budgets

Most IT leaders rely on Google Analytics to understand their website traffic, but this creates a dangerous blind spot when it comes to hosting costs. Here's why:

What your analytics show:

- Human visitors who load JavaScript and accept cookies

- Engaged users browsing multiple pages

- Clear geographic and demographic patterns

What your infrastructure actually serves:

- Every HTTP request that reaches your web server

- API calls from automated systems and integrations

- Security scanners probing your site structure

- Sophisticated bots that bypass basic detection methods

- Requests that bootstrap your application without loading full pages

This fundamental mismatch explains why your hosting reports might show concerning activity while your user analytics appear completely normal.

Your server infrastructure processes and responds to all requests—human or automated—but your analytics only capture a fraction of that activity.

The real-world impact of uncontrolled automated traffic

Let's say you're a state or local government agency or a higher-education institution. Uncontrolled bot traffic creates:

- Unpredictable hosting costs that complicate annual budget planning

- Performance degradation during critical public service periods

- IT time wasted troubleshooting phantom traffic spikes

- Budget uncertainty that forces difficult infrastructure decisions

What to look for when checking for automated traffic

Before you can address automated traffic issues, you need visibility into what's actually happening on your server. The disconnect between your analytics and infrastructure usage often indicates that automated systems are accessing your site in ways that traditional monitoring doesn't capture.

Server log red flags

Your server logs contain the complete picture of requests hitting your infrastructure. Look for these patterns that suggest automated activity:

- Repetitive Access Patterns: Identical user agents making systematic requests to specific pages

- Resource-Intensive Targeting: Automated systems focusing on search results, directories, or data-heavy pages

- Suspicious Timing: Traffic spikes with no correlation to known events, campaigns, or announcements

- Geographic Anomalies: Unusual request volumes from regions unlikely to have legitimate interest
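
To make the first red flag concrete, here is a minimal Python sketch that counts requests per IP address and user agent. It assumes your access logs use the Combined Log Format (the Apache and Nginx default); the regular expression, the function names `parse_line` and `top_clients`, and the sample lines are illustrative assumptions, not part of any Acquia or Drupal tooling.

```python
import re
from collections import Counter

# Combined Log Format is assumed here; adjust the pattern if your logs differ.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)

def parse_line(line):
    """Return a dict of fields for one access-log line, or None if it does not match."""
    match = LOG_LINE.match(line)
    return match.groupdict() if match else None

def top_clients(lines, limit=10):
    """Count requests per (ip, user agent) pair and return the busiest pairs."""
    counts = Counter()
    for line in lines:
        entry = parse_line(line)
        if entry:
            counts[(entry["ip"], entry["agent"])] += 1
    return counts.most_common(limit)

# Made-up sample lines using documentation-only IP ranges.
sample = [
    '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /search?q=a HTTP/1.1" 200 512 "-" "ExampleBot/1.0"',
    '203.0.113.5 - - [10/Oct/2024:13:55:37 +0000] "GET /search?q=b HTTP/1.1" 200 498 "-" "ExampleBot/1.0"',
    '198.51.100.23 - - [10/Oct/2024:13:56:01 +0000] "GET /about HTTP/1.1" 200 2048 "-" "Mozilla/5.0"',
]

for (ip, agent), hits in top_clients(sample):
    print(hits, ip, agent)
```

A single client that dominates this list with one unchanging user agent is worth a closer look, though a high count alone does not prove the traffic is unwanted.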

Infrastructure-level warning signs

Modern automated systems often use sophisticated techniques to appear legitimate. Watch for:

- High-Frequency, Low-Engagement Patterns: Multiple requests with no meaningful interaction indicators

- Systematic Crawling Behavior: Methodical progression through URL structures or parameters

- Non-Standard Access Paths: Requests bypassing normal user flows or hitting unusual endpoints

- Resource Consumption Spikes: Server load that doesn't match your user activity metrics
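
One rough way to approximate "high frequency, low engagement" is to check how many of a client's requests are for static assets. Browsers usually fetch CSS, JavaScript, and images alongside a page, while many automated clients request only the page itself. The sketch below is a heuristic under that assumption; the extension list, thresholds, and function names are hypothetical choices for illustration.

```python
from collections import defaultdict

# File extensions treated as static assets; an assumed list, adjust for your site.
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".ico", ".woff2")

def is_static(path):
    """True when the request path (ignoring any query string) looks like a static asset."""
    return path.split("?", 1)[0].lower().endswith(STATIC_EXTENSIONS)

def low_engagement_clients(requests, min_requests=20, max_asset_ratio=0.05):
    """Return clients that make many requests but load almost no static assets.

    `requests` is an iterable of (client_ip, path) pairs.
    """
    totals = defaultdict(int)
    assets = defaultdict(int)
    for ip, path in requests:
        totals[ip] += 1
        if is_static(path):
            assets[ip] += 1
    return sorted(
        ip for ip, total in totals.items()
        if total >= min_requests and assets[ip] / total <= max_asset_ratio
    )
```

If a CDN serves your static files, asset requests may never reach the origin logs, in which case this ratio would look low for everyone and the heuristic should not be used on those logs.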

Essential bot traffic detection tools and techniques

Effective traffic analysis requires looking beyond standard analytics. Here's your detection toolkit:

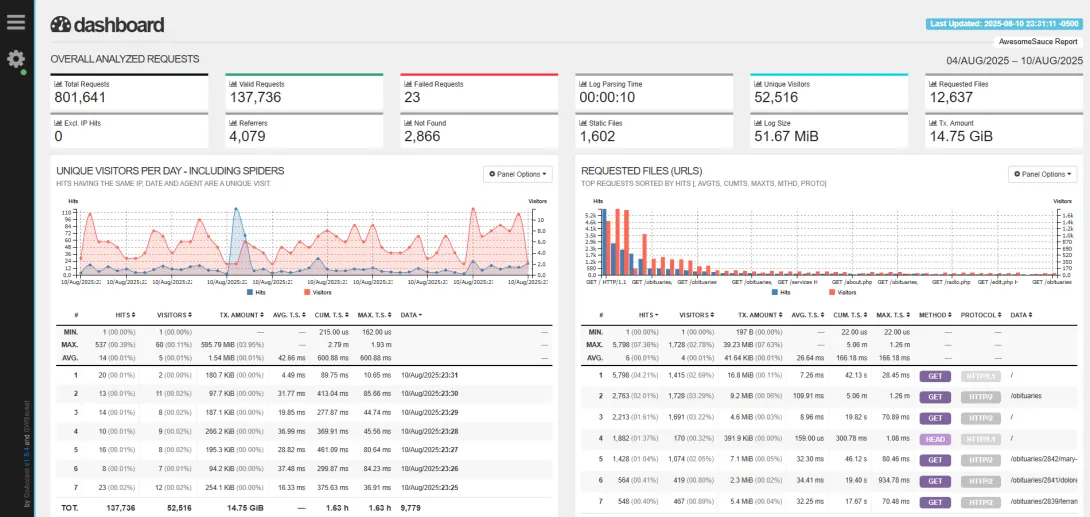

Server log analysis

- Use command-line tools like GoAccess and AWK for efficient log parsing

- Focus analysis on dynamic content requests rather than static assets

- Set up automated alerts for unusual traffic patterns or volume spikes
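
As a starting point for volume-spike alerts, the sketch below flags any hour whose request count is far above the median hour. The `find_spikes` name, the multiplier of 3, and the sample counts are assumptions for illustration; a real alert would read counts from your own logs and use a threshold tuned to your traffic.

```python
from statistics import median

def find_spikes(hourly_counts, multiplier=3.0):
    """Return the hours whose request count exceeds multiplier x the median hourly count.

    `hourly_counts` maps an hour label to the number of requests seen in that hour.
    """
    if not hourly_counts:
        return []
    baseline = median(hourly_counts.values())
    return [hour for hour, count in hourly_counts.items() if count > multiplier * baseline]

# Made-up counts of dynamic (non-static) requests per hour.
counts = {"00:00": 120, "01:00": 110, "02:00": 900, "03:00": 130, "04:00": 125}
print(find_spikes(counts))  # ['02:00']
```

The median is used instead of the mean so that the spike itself does not drag the baseline upward.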

Enhanced monitoring

- User-agent analysis to identify suspicious client patterns

- Geographic tracking with IP geolocation databases

- Request rate monitoring to spot clients exceeding normal usage patterns

- Network analysis to understand traffic sources and characteristics
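
Request rate monitoring can be sketched with a sliding window per client. The example below assumes you have already extracted (timestamp, IP) pairs from your logs, sorted by time; the function name and the default limit of 100 requests per 60 seconds are placeholder values, not recommendations.

```python
from collections import defaultdict, deque

def clients_over_limit(events, window_seconds=60, max_requests=100):
    """Return the set of clients that exceed max_requests within any sliding window.

    `events` is an iterable of (timestamp_seconds, client_ip) pairs sorted by timestamp.
    """
    recent = defaultdict(deque)
    flagged = set()
    for timestamp, ip in events:
        window = recent[ip]
        window.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while window[0] <= timestamp - window_seconds:
            window.popleft()
        if len(window) > max_requests:
            flagged.add(ip)
    return flagged
```

What counts as "normal" varies widely between sites, so it is worth measuring typical human request rates on your own site before settling on a limit.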

Integration opportunities

Consider monitoring solutions that can:

- Track requests from specific network ranges or autonomous systems

- Provide real-time alerts when traffic patterns exceed defined thresholds

- Generate detailed reports on access trends and potential issues

- Integrate with your existing administrative workflows
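
If you want to prototype network-range tracking before adopting a monitoring product, Python's standard `ipaddress` module is enough for a first pass. In this sketch the CIDR ranges are documentation-only placeholders and `count_by_network` is a hypothetical helper; substitute the ranges you actually want to watch.

```python
import ipaddress
from collections import Counter

def count_by_network(ips, networks):
    """Count requests per watched network; addresses outside every network count as 'other'."""
    parsed = [ipaddress.ip_network(cidr) for cidr in networks]
    counts = Counter()
    for raw in ips:
        address = ipaddress.ip_address(raw)
        label = next((str(net) for net in parsed if address in net), "other")
        counts[label] += 1
    return counts

# Placeholder ranges and addresses reserved for documentation.
watched = ["198.51.100.0/24", "203.0.113.0/24"]
seen = ["198.51.100.7", "198.51.100.8", "203.0.113.9", "192.0.2.1"]
print(count_by_network(seen, watched))
```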

Building your bot traffic defense foundation

Detection is just the first step. Prevention requires a layered security approach. Even before implementing advanced bot protection, make sure these fundamentals are in place.

Drupal security essentials

Keep systems updated:

- Implement CI/CD pipelines for regular security updates

- Use Drush or Composer for update management

- Maintain staging environments for testing

- Enable the Update Manager module to be notified when security updates are available

Strengthen authentication:

- Deploy Single Sign-On (SSO) with Two-Factor Authentication

- Enforce strong password policies aligned with the NIST SP 800-63 guidelines

- Implement role-based access controls with least-privilege principles

- Regularly audit all user permissions

Infrastructure hardening:

- Enforce HTTPS site-wide with automatically renewed certificates

- Implement daily database backups with secure off-site storage

- Configure firewall rules to restrict database access

- Enable comprehensive logging for security monitoring

Why these security foundations matter

These security practices create multiple protection layers that complement bot-specific defenses:

- Reduced Attack Surface: Each enhancement narrows bot access pathways

- Early Warning Systems: Proper monitoring alerts you to unusual activity

- Defense-in-Depth: Multiple protection layers ensure resilience

- Regulatory Compliance: Helps meet public sector security requirements

Understanding your traffic patterns

The key to managing infrastructure costs lies in understanding what's actually consuming your server resources. This helps you make informed decisions about hosting plans, performance optimization, and protection strategies.

Common automated traffic sources

Beneficial automation:

- Search engine crawlers that help users find your content (like Googlebot)

- Monitoring services that verify site availability and performance

- Integration partners accessing APIs for legitimate data sharing

- Accessibility testing tools ensuring compliance with standards

Resource-heavy automation:

- Data scrapers systematically collecting public information

- Security scanners probing for vulnerabilities (often from well-meaning sources)

- Research systems analyzing content patterns or structures

- Competitive intelligence tools tracking changes and updates

Infrastructure features that attract attention:

- Search functionality and filtered results pages

- Public APIs and data endpoints

- Staff directories and organizational charts

- Document repositories and file downloads

- Forms and interactive features

Making data-driven decisions

Understanding your traffic composition helps you:

- Plan capacity based on actual usage patterns rather than assumptions

- Optimize performance for your most resource-intensive features

- Budget accurately by understanding infrastructure demand drivers

- Prioritize security for your highest-risk endpoints and features

This analysis forms the foundation for any advanced protection strategy, ensuring solutions address your specific challenges rather than applying generic approaches.
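
To see which parts of a site draw the most requests, one option is to group log entries by their top-level path section. The sketch below assumes you have (path, response bytes) pairs from your logs; `usage_by_section` and the sample paths are hypothetical, and request count and bytes served are only proxies for server load, since access logs do not record CPU or database time by default.

```python
from collections import defaultdict
from urllib.parse import urlsplit

def usage_by_section(requests):
    """Summarise request count and bytes served per top-level path section.

    `requests` is an iterable of (path, response_bytes) pairs.
    """
    summary = defaultdict(lambda: {"requests": 0, "bytes": 0})
    for path, size in requests:
        segments = urlsplit(path).path.strip("/").split("/")
        section = "/" + segments[0]
        summary[section]["requests"] += 1
        summary[section]["bytes"] += size
    # Busiest sections first.
    return sorted(summary.items(), key=lambda item: item[1]["requests"], reverse=True)

# Made-up sample data.
sample_requests = [
    ("/search?q=a", 2000),
    ("/search?q=b", 2100),
    ("/directory/staff", 5000),
    ("/", 800),
]
for section, stats in usage_by_section(sample_requests):
    print(section, stats["requests"], stats["bytes"])
```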

Your next steps

- Investigate your current situation: Compare your hosting usage reports with analytics data over the past 6 months—look for discrepancies

- Implement traffic analysis: Set up server log monitoring to understand your actual traffic composition

- Audit security foundations: Ensure your basic Drupal security practices are solid and current

- Assess infrastructure impact: Identify which features and endpoints consume the most server resources

- Consider advanced solutions: Research specialized protection options designed for your specific hosting environment
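
The first step above, comparing hosting usage with analytics, can start as simple arithmetic. This sketch divides the requests your host reports by the pageviews your analytics tracked for each month and flags months where the ratio is unusually high. All figures are invented for illustration, and the threshold of 3 is an assumption; some gap is normal because the two systems measure different things.

```python
def discrepancy_report(hosting_requests, analytics_pageviews, threshold=3.0):
    """Return (month, ratio) pairs where served requests exceed threshold x tracked pageviews.

    Both arguments map a month label to a count. Months missing from analytics are skipped.
    """
    flagged = []
    for month, served in hosting_requests.items():
        tracked = analytics_pageviews.get(month)
        if not tracked:
            continue
        ratio = served / tracked
        if ratio > threshold:
            flagged.append((month, round(ratio, 1)))
    return flagged

# Invented monthly figures, for illustration only.
hosting = {"2024-01": 400_000, "2024-02": 1_500_000}
analytics = {"2024-01": 200_000, "2024-02": 210_000}
print(discrepancy_report(hosting, analytics))  # [('2024-02', 7.1)]
```

A month that stands out here is a prompt to dig into the server logs for that period, not a verdict on its own.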

The disconnect between user analytics and infrastructure costs is a solvable problem. Understanding what's actually happening on your servers is the first step toward predictable budgets and optimal performance.

Keep bad bot traffic off your site

Detecting and understanding bot traffic is just the start. Once you’ve confirmed the issue and secured the basics, the next step is stopping bad traffic before it reaches your Drupal site—the only way to keep it from consuming your Views & Visits quota and inflating your bill.

That’s where edge-layer protection comes in. Learn how it works and why it’s the most effective defense for Acquia and Pantheon sites in our follow-up: How Cloudflare WAF Solves Bot Problems for Good.

Stop guessing, start proving—and protect your budget from costly, invisible traffic.

For the full step-by-step strategy, including advanced rate limiting, good bot management, and our turnkey Cloudflare implementation, download the Drupal bot protection playbook.